The most important file is the Dockerfile. This file is used to build the Docker image you will use to run the Airflow instance. A Dockerfile is a text file that contains all the commands a user could call on the command line to assemble an image. Using docker build, users can create an automated build that executes several command-line instructions in succession.

You will also find a requirements.txt file in the folder that lists all the Python packages you'll need to run your DAGs. You can add additional packages to this file as needed. At this point we don't have any additional dependencies; the only one present will be apache-airflow.

DAGs placed in the /dags directory will automatically appear in the Airflow UI. The .py file that contains the logic for the DAG has a DAG context manager definition; you can see the one below in the line with DAG('example_dag'. Newer versions of Airflow use decorators to accomplish the same thing.
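As a quick sanity check on the project folder, here is a minimal sketch that looks for the three entries mentioned above: the Dockerfile, requirements.txt, and the dags directory. The function name missing_entries and the EXPECTED_ENTRIES tuple are my own, made up for illustration, and a generated project will likely contain more files than these.

```python
from pathlib import Path

# Entries named in this walkthrough; a real project may contain more (assumption).
EXPECTED_ENTRIES = ("Dockerfile", "requirements.txt", "dags")


def missing_entries(project_root):
    """Return the expected entries that are absent from project_root."""
    root = Path(project_root)
    return [name for name in EXPECTED_ENTRIES if not (root / name).exists()]


if __name__ == "__main__":
    # Check the current working directory.
    missing = missing_entries(".")
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("Project layout looks complete.")
```

Run it from inside the project folder; an empty result means all three entries are where this post expects them.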

Astronomer CLI

Finally, let's get the Astronomer CLI (Command Line Interface) installed. The CLI is a command-line tool that allows you to interact with the Astronomer service. You can use the CLI to create new projects, deploy code, and manage users.

Create a new folder for your project and launch your IDE of choice; mine is Visual Studio Code. I called my folder Astro in this example, but a more descriptive name for the job you're trying to run would be more appropriate. Run the command astro dev init, which creates a new project with a few files and folders.

Note: Commercial use of Docker Desktop in larger enterprises (more than 250 employees OR more than $10 million in annual revenue) and government entities requires a paid subscription.
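Before running astro dev init, it can help to confirm that both command-line tools are actually reachable. The sketch below is only an illustration: it assumes the executables are named docker and astro (the latter taken from the command above), and the helper name find_missing_tools is made up for this example.

```python
import shutil


def find_missing_tools(tools=("docker", "astro")):
    """Return the names from tools that cannot be found on the PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = find_missing_tools()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("docker and astro are both available.")
```

This only checks that the executables exist; it does not verify that Docker Desktop is running.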


Homebrew will be the easiest way for you to install the Astronomer CLI. If you don't have Homebrew installed, you can install it by visiting the Homebrew website and running the command they provide.

Docker is a container management system that allows you to run isolated environments on local computers and in the cloud. Docker is extremely lightweight and powerful. In this setup, Airflow runs in Docker containers that include everything it needs, such as a web server and a local database. Head to the Docker website and install Docker Desktop for your operating system.

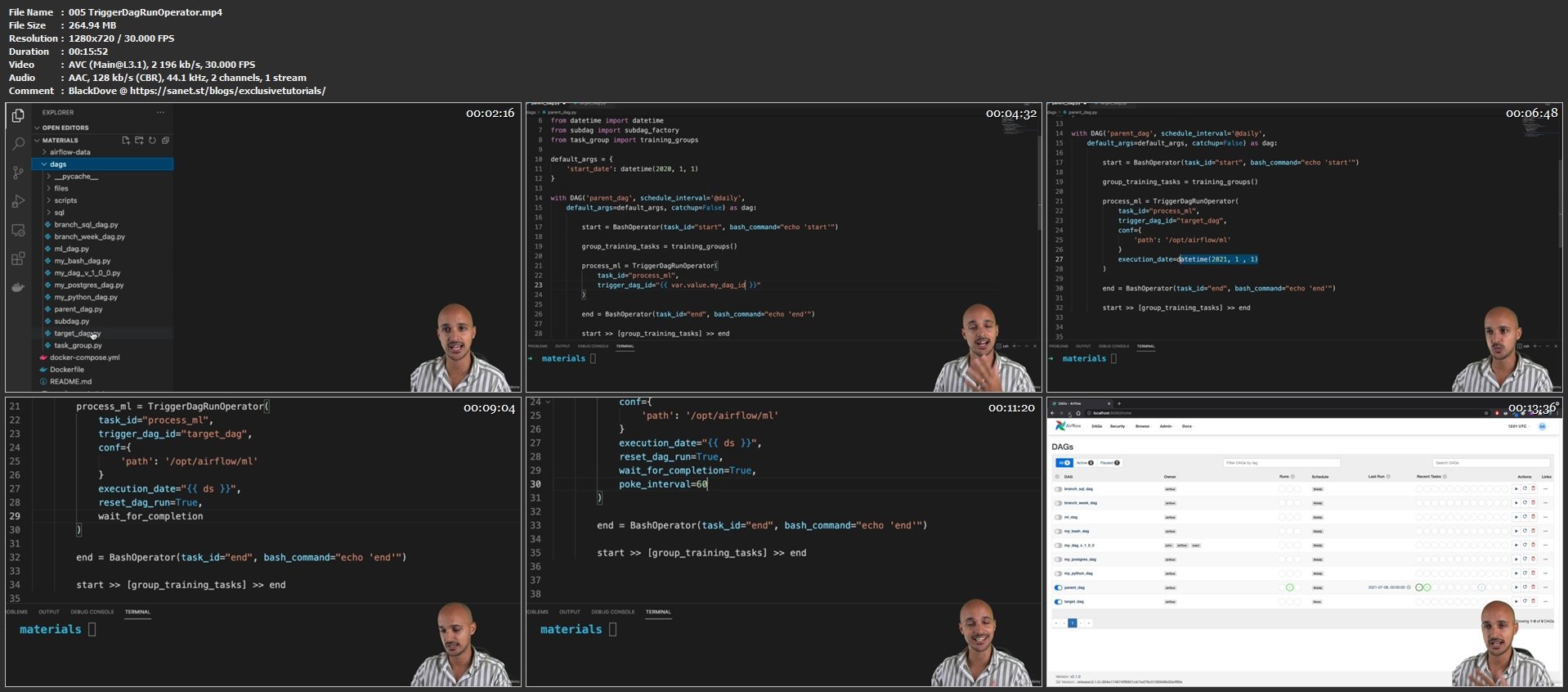


Apache Airflow is an open-source workflow management platform that helps you build data engineering pipelines. One of the biggest advantages of Airflow, and why it is so popular, is that you write your configuration in Python in the form of what is referred to as a DAG (Directed Acyclic Graph). Writing a DAG in Python means that you can leverage the large suite of Python libraries to do nearly anything you want. Astronomer is a managed Airflow service that allows you to orchestrate workflows in a cloud environment.

A common use case for Airflow is taking data from one source, transforming it over several steps, and loading it into a data warehouse. You can even leverage Airflow for feature engineering, where you apply data transformations in your data warehouse, creating new views of data. In this post, I'll walk through the basics of Airflow and how to get started with Astronomer.
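To make the DAG idea concrete, here is a small sketch using only Python's standard library rather than Airflow itself. It models the extract, transform, and load use case as a dependency graph and asks for a valid run order. The task names and the run_order helper are hypothetical, and Airflow's own scheduler does far more than this; the point is only to show what "directed" and "acyclic" buy you.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each key is a task, each value is the set of
# tasks that must finish before it can start.
pipeline = {
    "extract": set(),
    "clean": {"extract"},
    "aggregate": {"clean"},
    "load": {"aggregate"},
}


def run_order(dependencies):
    """Return one valid execution order for the given task dependencies."""
    return list(TopologicalSorter(dependencies).static_order())


print(run_order(pipeline))  # ['extract', 'clean', 'aggregate', 'load']

# A cycle means no valid order exists, which is why the graph must be acyclic.
try:
    run_order({"a": {"b"}, "b": {"a"}})
except CycleError:
    print("Cycle detected: this is not a DAG.")
```

Because every task only depends on tasks upstream of it, there is always at least one order in which everything can run, and that is the property a workflow tool relies on.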
